Carathéodory's theorem (convex hull)

- See also Carathéodory's theorem for other meanings

In convex geometry Carathéodory's theorem states that if a point x of Rd lies in the convex hull of a set P, there is a subset P′ of P consisting of d+1 or fewer points such that x lies in the convex hull of P′. Equivalently, x lies in an r-simplex with vertices in P, where  . The result is named for Constantin Carathéodory, who proved the theorem in 1911 for the case when P is compact. In 1914 Ernst Steinitz expanded Carathéodory's theorem for any sets P in Rd.

. The result is named for Constantin Carathéodory, who proved the theorem in 1911 for the case when P is compact. In 1914 Ernst Steinitz expanded Carathéodory's theorem for any sets P in Rd.

For example, consider a set P = {(0,0), (0,1), (1,0), (1,1)} which is a subset of R2. The convex hull of this set is a square. Consider now a point x = (1/4, 1/4), which is in the convex hull of P. We can then construct a set {(0,0),(0,1),(1,0)} = P ′, the convex hull of which is a triangle and encloses x, and thus the theorem works for this instance, since |P′| = 3. It may help to visualise Carathéodory's theorem in 2 dimensions, as saying that we can construct a triangle consisting of points from P that encloses any point in P.

Contents |

Proof

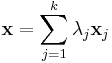

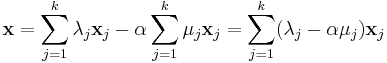

Let x be a point in the convex hull of P. Then, x is a convex combination of a finite number of points in P :

where every xj is in P, every λj is positive, and  .

.

Suppose k > d + 1 (otherwise, there is nothing to prove). Then, the points x2 − x1, ..., xk − x1 are linearly dependent,

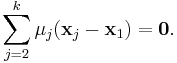

so there are real scalars μ2, ..., μk, not all zero, such that

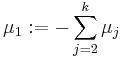

If μ1 is defined as

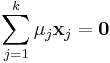

then

and not all of the μj are equal to zero. Therefore, at least one μj>0. Then,

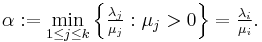

for any real α. In particular, the equality will hold if α is defined as

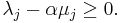

Note that α>0, and for every j between 1 and k,

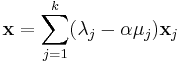

In particular, λi − αμi = 0 by definition of α. Therefore,

where every  is nonnegative, their sum is one , and furthermore,

is nonnegative, their sum is one , and furthermore,  . In other words, x is represented as a convex combination of at most k-1 points of P. This process can be repeated until x is represented as a convex combination of at most d + 1 points in P.

. In other words, x is represented as a convex combination of at most k-1 points of P. This process can be repeated until x is represented as a convex combination of at most d + 1 points in P.

An alternative proof uses Helly's theorem.

See also

References

- Carathéodory, C. (1911), "Über den Variabilitätsbereich der Fourierschen Konstanten von positiven harmonischen Funktionen", Rendiconti del Circolo Matematico di Palermo 32: 193–217, doi:10.1007/BF03014795.

- Danzer, L.; Grünbaum, B.; Klee, V. (1963), "Helly's theorem and its relatives", Convexity, Proc. Symp. Pure Math., 7, American Mathematical Society, pp. 101–179.

- Eckhoff, J. (1993), "Helly, Radon, and Carathéodory type theorems", Handbook of Convex Geometry, A, B, Amsterdam: North-Holland, pp. 389–448.

- Steinitz, Ernst (1913), "Bedingt konvergente Reihen und konvexe Systeme", J. Reine Angew. Math. 143 (143): 128–175, doi:10.1515/crll.1913.143.128.

External links

- Concise statement of theorem in terms of convex hulls (at PlanetMath)